Only the imagination grows out of its limitations.

In the example shown in the previous post I used 20 iterations at 512×512. A few lingering questions that might be asked are…

- What about more iterations?

- What about a lower resolution, like 256×256 ?

- Machine learning code typical initializes using random parameters will this affect the image in another identical run ?

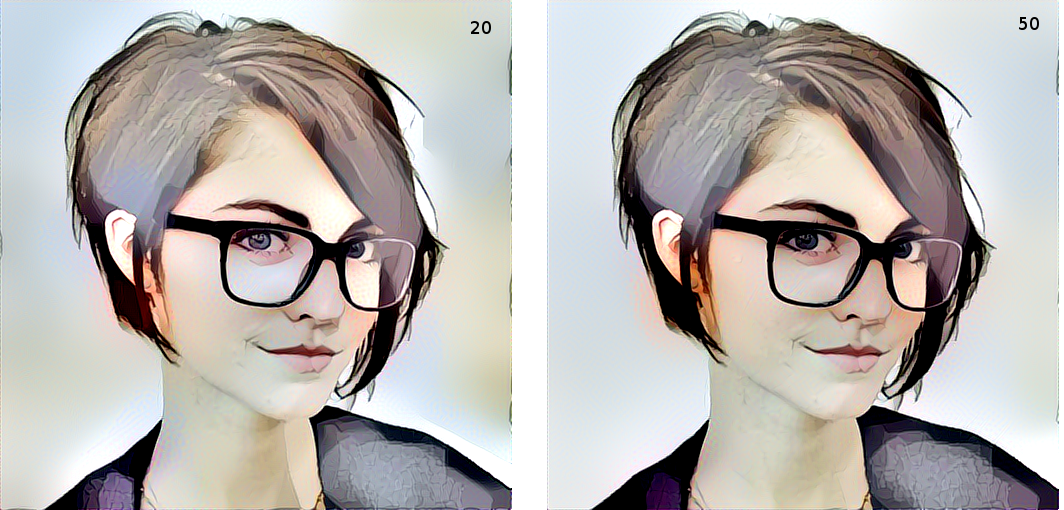

20 Iterations and 50 Iterations

More iterations up to a point make for a better image. There is a point where the loss value deltas get smaller between iterations and a point of diminishing returns is reached. Not much difference that can be seen happens beyond 20 iterations for this run. There are minor details that have changed but you have to really look carefully to pick them out.

Start of iteration 0

Current loss value: 1.68853e+11

Iteration 0 completed in 672s

Start of iteration 1

Current loss value: 1.06826e+11

Iteration 1 completed in 616s

Start of iteration 2

Current loss value: 7.61243e+10

Iteration 2 completed in 594s

Start of iteration 3

Current loss value: 5.69757e+10

Iteration 3 completed in 501s

Start of iteration 4

Current loss value: 4.73256e+10

Iteration 4 completed in 496s

Start of iteration 5

…..

Start of iteration 9

Current loss value: 3.22461e+10

Iteration 9 completed in 498s

……

Start of iteration 19

Current loss value: 2.63259e+10

Iteration 19 completed in 471s

…….

Start of iteration 49

Current loss value: 2.26513e+10

Iteration 49 completed in 592s

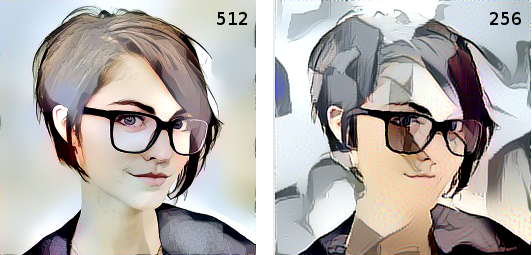

Lower Resolution

The model will perform poorly on lower resolutions, even with 20 iterations, 256×256 will look sloppy and abstract. The only reason to go this low would be to run a bunch of iterations fast to see if it worth trying at higher resolution. Kind of like a preview. On my machine the 256×256 iterations run about 5x faster than the 512×512 iterations.

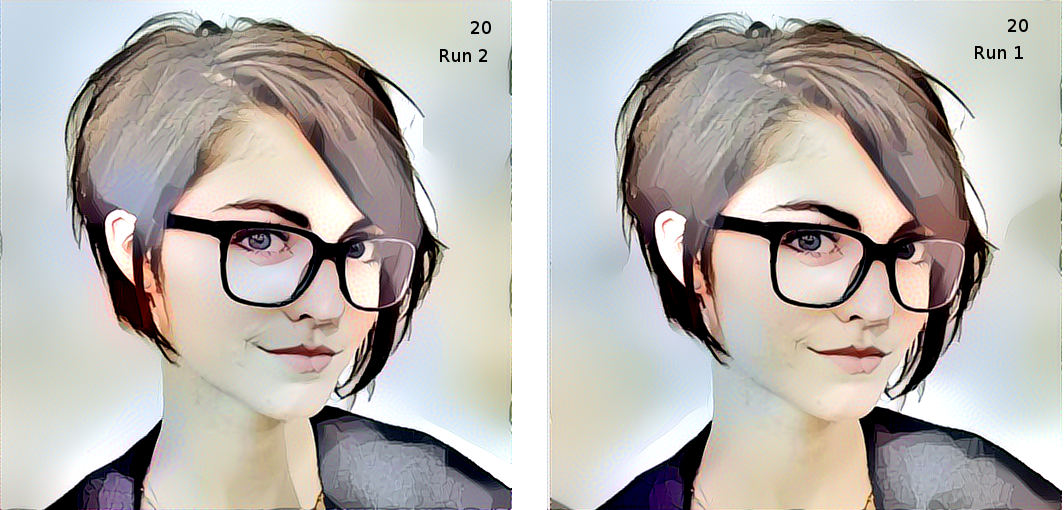

Random Initialization

Because the machine learning model loads itself with random weights and biases at the start of a model run and works from that as a starting point there is some variations in the results from run to run. This can be seen in theses images as there are slight variations in the results. Sometimes it is worth running the model over and over and then hand picking the best result from a batch of outputs.

As an aside. In some machine learning code it is possible to seed the random number generator so that the random starting point is not really random but seeded to be able to reproduce the same results. Occasionally I have had to do this when training genetic algorithms for trading, hand built code, so total control on my part. I basically want a reference run against which I can gauge future changes to the code against. By using a standard set of input, a fixed time series and seeded random initialization, I get the same tuning every time. Then if a change happens in the code, I know it is a code change and not in the data. Have a reference copy archive makes it reproducible.